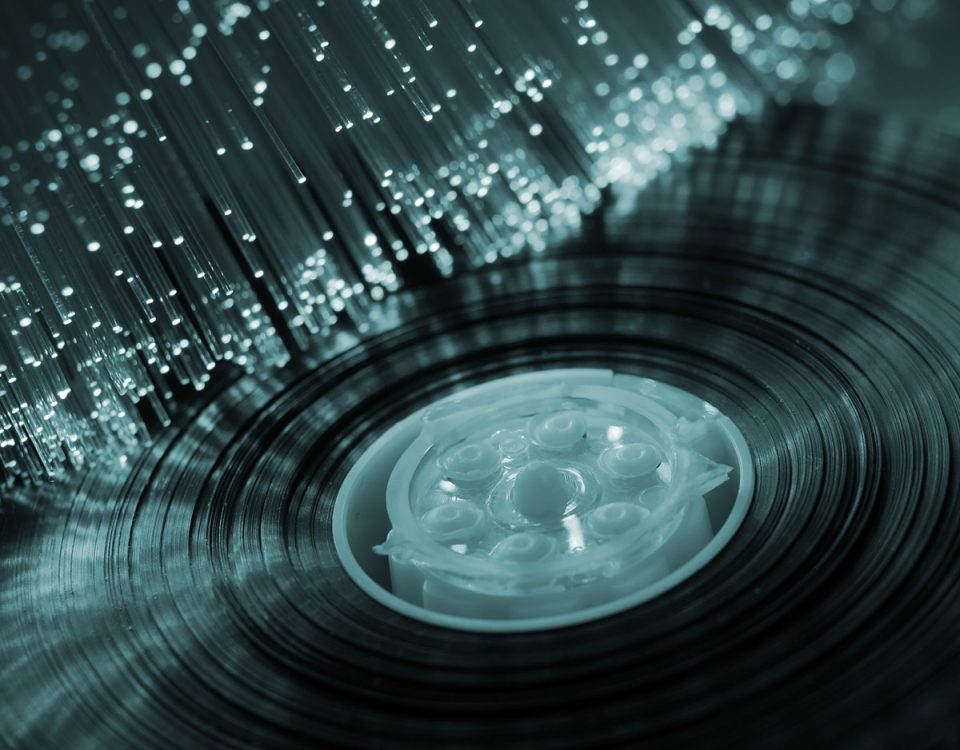

Data Erasure Software Enveloped in Mist

Commercial software for data erasure is not as “shiny” as it could be because it has been enveloped in mist due to a lack of transparency and hard data.

This has been the case from the beginning, when the Gutmann myth about HDD erasure was left undebunked, and it remains the case today with the sanitization of SSDs and other flash memory.

NIST defines sanitization as follows: “Sanitization is a process to render access to target data on the media infeasible for a given level of recovery effort. The level of effort applied when attempting to retrieve data may range widely.” Where are the studies with hard data that would support risk assessments and give us insight into how different data erasure technologies perform?

It is astonishing that in the 20 years of the existence of commercial data erasure software, so little data has been published about its performance. What has been published usually concerns a sample of hard drives bought on the market, on which data was found because some drives were not erased and others were only partly erased (probably just by reformatting). Or it is a comparison of software functions, such as detecting remapped sectors. Beyond that, the industry has been hiding behind the certification of its solutions, making certification look like the holy grail surrounded by a misty swamp.

This strategy might have been profitable for software sellers, but it has not helped decision-makers gain understanding or enabled them to make risk-based decisions for cost-effective security. The result is that there is still a lot of distrust in data erasure software as a secure media sanitization tool, and a lot of inefficient use of this technology.

For example, the DoD 5220.22-M 3-pass method has been outdated for 15 years and has been retired by security authorities, yet organizations continue to prescribe it. Most experts agree that a single overwrite of an HDD is sufficient to purge or clear it if the overwrite is truly random. Overwriting a disk several times increases costs by multiplying the processing time. This can easily lead to the decision that it is less expensive and faster to destroy the complete disk.
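To make the cost of extra passes concrete, here is a rough back-of-the-envelope sketch. The function name `overwrite_hours`, the 4 TB capacity, and the 150 MB/s sustained write rate are illustrative assumptions, not measured figures, and the estimate ignores any verification pass.

```python
def overwrite_hours(capacity_bytes: float,
                    write_bytes_per_s: float,
                    passes: int = 1) -> float:
    """Estimate the hours needed to overwrite a whole drive `passes` times.

    Assumes a constant sustained write rate, which real drives only
    approximate, and does not include time for a verification read.
    """
    if capacity_bytes <= 0 or write_bytes_per_s <= 0 or passes < 1:
        raise ValueError("capacity and throughput must be positive, passes >= 1")
    seconds = passes * capacity_bytes / write_bytes_per_s
    return seconds / 3600.0


if __name__ == "__main__":
    capacity = 4e12      # assumed: 4 TB drive
    throughput = 150e6   # assumed: 150 MB/s sustained write
    for passes in (1, 3):
        hours = overwrite_hours(capacity, throughput, passes)
        print(f"{passes} pass(es): {hours:.1f} h")
```

Under these assumptions a single pass takes about 7.4 hours and three passes about 22.2 hours per drive, which illustrates why multi-pass policies can make physical destruction look cheaper.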

It is naïve to suppose that an organization avoids risk merely by imposing a certified data erasure software solution and storing piles of erasure reports somewhere. What really matters is ensuring that not a single data carrier leaves the organization’s control without being sanitized. The sanitization method or solution must match the data category and the characteristics of the data carrier, and a good verification system needs to be in place.

Emphasizing that software is certified takes other aspects out of consideration. For example, certification is time-constrained. Over time, new models of data carriers and new versions of erasure software enter the market. One can choose to stick with an old certified version of the software, which might not perform as required on new data carrier models, or to start using new versions, which are not certified. It takes much too long and is too expensive to certify every new software version and update.

Relevant data can help security departments identify which types of data carriers should be forensically tested after erasure, and spare them from waiting for a certification that might never come.